It was bound to make headlines: a study claiming that a computer could predict if someone is gay or straight, based on a photo. But the story has moved beyond headlines, to gay rights advocates charging that the study is scientifically invalid and an author of the paper saying he’s under unfair personal attack.

“What really saddens me is that LGBTQ rights groups, [Human Rights Campaign] and GLAAD, who strived for so many years to protect rights of the oppressed, are now engaged in a smear campaign against us with a real gusto,” Michal Kosinski, an assistant professor of organizational behavior at Stanford University, wrote on Facebook about the backlash against his work.

Some background: Kosinski, a psychologist and data scientist, and Yilun Wang, a computer scientist who studied at Stanford, used tens of thousands of pictures from dating sites and corresponding information about sexual preferences to create an algorithm to predict someone’s sexual orientation.

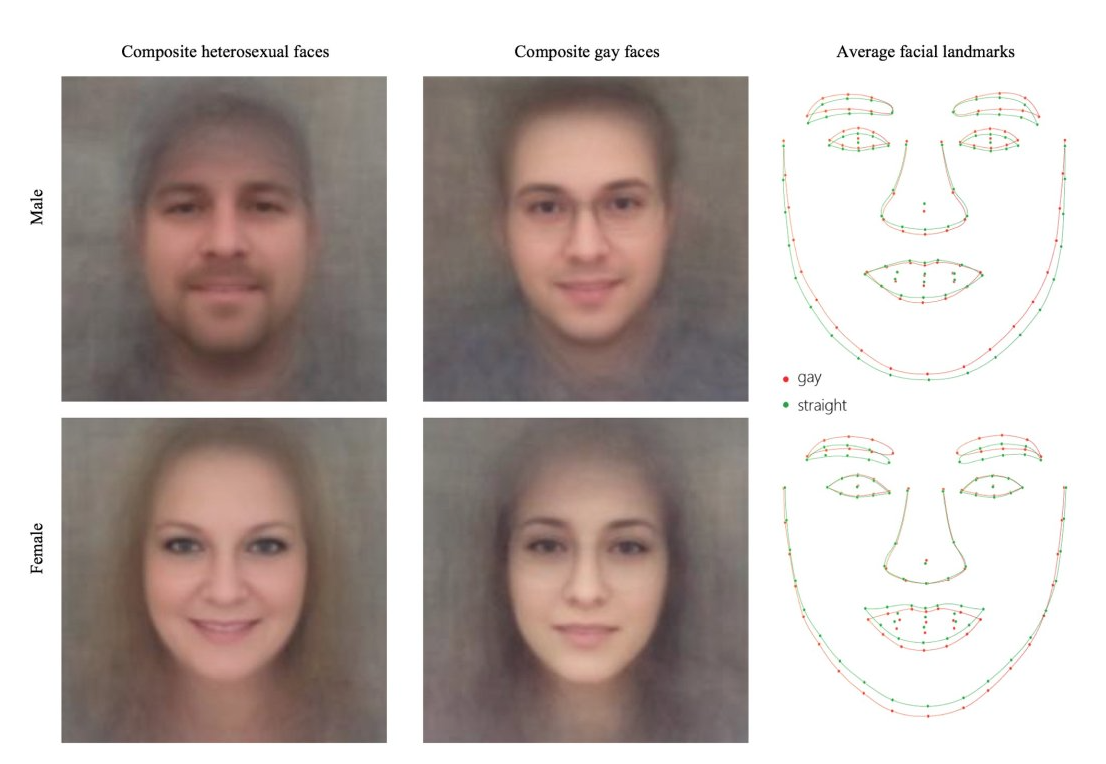

The computer’s eventual prediction-success rate based on a single photo was 81 percent for male faces and 71 percent for female faces. Significantly, though, the computer was choosing between two photos, one of a person who self-identified as gay and one who identified as straight; contrary to some reports about the study, the computer wasn't looking at a photo and simply saying whether the person was gay or not.

Loading five photos of a person pushed the success rate to 91 percent for men and 83 percent for women. The program also found that gay men’s faces were more “feminine,” with more “gender-atypical” features than straight men’s, and that lesbians’ faces were more “masculine” than straight women’s.

Source: Michal Kosinski via Twitter

That’s a much better success rate than humans trying to exercise their gaydar, according to the paper, and thus, the artificial intelligence was technically successful. Yet the development of such a technology prompts dystopian but essential questions, such as how it could potentially be used to discriminate against people.

Kosinski has previously written extensively on personal data and privacy, and his new study recalls some of that work in saying that “digitalization of our lives and rapid progress in AI continues to erode the privacy of sexual orientation and other intimate traits. ... We did not create a privacy-invading tool, but rather showed that basic and widely used methods pose serious privacy threats.” The study also notes the potential for diminished accuracy outside its parameters, but it does say the computer was relatively successful in predicting who was gay or straight in photos taken not only from dating websites but from Facebook (that part of the study involved comparing photos of male, gay Facebook users with photos of straight dating site users).

Still, critics seized on the paper, accusing the authors not just of entering an ethically gray research area but of shoddy science. Criticism from GLAAD and the HRC in particular prompted Kosinski’s comments on social media.

“Let’s be clear: our paper can be wrong,” Kosinski said in his post. “In fact, despite evidence to the contrary, we hope that it is wrong. But only replication and science can debunk it -- not spin doctors.”

Among other actions, GLAAD and HRC published a news release urging Stanford and “responsible” news media to “expose dangerous and flawed research that could cause harm to LGBTQ people around the world.” The release says that the paper -- among other alleged flaws -- makes “inaccurate assumptions,” categorically leaves out nonwhite subjects and has not been peer reviewed.

The study has in fact been peer reviewed and is forthcoming in the Journal of Personality and Social Psychology. But the sample is all white. The researchers say they could not find enough nonwhite gay participants to expand the group.

Jim Halloran, GLAAD’s chief digital officer, asserted in a statement that technology cannot determine whether someone is gay or straight. Rather, he said, this particular technology recognizes a “pattern that found a small subset of out white gay and lesbian people on dating sites who look similar. Those two findings should not be conflated.” Less than research or news, he added, the “reckless” study is a “description of beauty standards on dating sites that ignores huge segments of the LGBTQ community, including people of color, transgender people, older individuals and other LGBTQ people who don’t want to post photos on dating sites.”

Calling on Stanford to distance itself from “junk science,” Ashland Johnson, director of public education and research for the HRC, said the paper is “dangerously bad information that will likely be taken out of context” and threatening to “the safety and privacy of LGBTQ and non-LGBTQ people alike.”

GLAAD and HRC said they participated in a conference call with the researchers and representatives of Stanford months ago. They tried to raise their concerns about “overinflating” results, or their significance, at the time, to no avail, the groups said.

Kosinski was not immediately available for comment Monday.

Rich Ferraro, a spokesperson for GLAAD, called the allegation of a smear campaign as “sensational as the supposed findings of this research.”

Academic Freedom, Activism and AI

Especially in the era of social media, scientists can expect strong reactions to research in controversial areas. But is the reaction to this paper in particular, by so many non-academics, concerning for academic freedom? For a number of scholars, the answer was no.

Paul Pfleiderer, senior associate dean for academic affairs at Stanford’s Graduate School of Business, said in an emailed statement that the study is peer-reviewed research and pending in an academic journal that’s a publication of the American Psychological Association.

Getting published in peer-reviewed research journals, he said, “allows the interpretation of those findings and the research methodologies used to obtain them to be scrutinized by academics in the field and are appropriately a matter for discussion and debate.”

John K. Wilson, an independent scholar of academic freedom and co-editor of the American Association of University Professors’ “Academe” blog, said that anyone is “free to criticize a professor's research, even if those objections attack the media coverage more than the study itself.” It’s not a threat to academic freedom “unless an administration takes action to investigate or punish professors for their research,” he added.

Philip Cohen, a professor of sociology at the University of Maryland at College Park, said that scholars today “have to expect blowback when their research becomes public,” since academic research no longer “operates in a separate space from public discourse.”

Universities need to protect researchers whose work is attacked, he added, and “to earn that respect, researchers need to behave ethically as well as transparently.”

That said, Cohen had his own qualms about the study, or rather how it draws its conclusions. First, he said, all research on what “makes” people straight or gay is weak, since there is no way to measure people’s sexual orientation. That orientation can change over the span of one’s life and depending on social context, and some people who are attracted to or who sleep with others of the same sex don’t identify as homosexual, he said.

Cohen also disagreed with the authors’ suggestion that their findings support prenatal hormone theory, or the idea that exposure to certain hormones in utero can affect one’s lifelong sexual orientation. That's because the study involved a “very” gay sample of people who “are out and looking, and choosing photographs with that aim in mind,” he said. In other words, it's not a good basis for making generalizations about what biologically “causes” one to be gay. (Greggor Mattson, associate professor of sociology at Oberlin College, summed up that point in a post to the Scatterplot blog: "AI Can’t Tell If You’re Gay … But It Can Tell If You’re a Walking Stereotype." Kate Crawford, a distinguished research professor at New York University who studies AI and social impact, on Twitter said the study amounted to more "AI phrenology.")

Perhaps most crucially, Cohen said the study doesn’t make clear enough that the computer is always choosing between two people -- one gay and one not gay. So it isn’t saying someone is gay, but rather who is more likely gay, if forced to choose.

“There aren’t many real-world situations like that,” he said. “They never say how accurate their model is at identifying individual gay people when there is no straight person for comparison.”
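Cohen's distinction between picking the more likely person out of a pair and labeling one person on their own can be illustrated with a rough calculation. The sketch below is not the study's method. It assumes, purely for illustration, that a classifier assigns each person a score drawn from one of two equal-variance normal distributions, and that 5 percent of the people screened are gay (a hypothetical base rate, not a figure from the paper). It then asks what fraction of people flagged by such a classifier would actually be gay if its forced-choice accuracy were the 81 percent reported for men.

```python
from statistics import NormalDist

def score_gap_for_pairwise_accuracy(pairwise_acc: float) -> float:
    """Gap between the two group means that yields the given
    forced-choice accuracy, assuming unit-variance normal scores."""
    # For independent unit-variance normals, the chance that a score from
    # the higher group beats one from the lower group is Phi(gap / sqrt(2)).
    return NormalDist().inv_cdf(pairwise_acc) * 2 ** 0.5

def precision_at_threshold(gap: float, base_rate: float, threshold: float) -> float:
    """Share of people scoring above the threshold who belong to the
    higher-scoring group, given that group's share of the population."""
    true_positive_rate = 1 - NormalDist(mu=gap).cdf(threshold)
    false_positive_rate = 1 - NormalDist().cdf(threshold)
    true_positives = base_rate * true_positive_rate
    false_positives = (1 - base_rate) * false_positive_rate
    return true_positives / (true_positives + false_positives)

# 0.81 is the single-photo forced-choice rate for men reported above.
gap = score_gap_for_pairwise_accuracy(0.81)

# Hypothetical assumptions: a 5 percent base rate and a cutoff placed
# midway between the two group means.
precision = precision_at_threshold(gap, base_rate=0.05, threshold=gap / 2)

print(f"Forced-choice accuracy: 0.81; precision when labeling individuals: {precision:.2f}")
```

Under these assumptions, most of the people the classifier flags would be straight, even though the same classifier picks correctly in four out of five forced-choice pairs. A stricter cutoff would raise that share but flag fewer people overall.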

As for ethics, Cohen said the research topic wasn’t off-limits, but that it would have been good for Kosinski and Wang to declare somewhere in the paper itself that they wouldn’t help people use their work to identify others without their consent.

Max Tegmark, author of Life 3.0: Being Human in the Age of Artificial Intelligence and scientific director of the Foundational Questions Institute at the Massachusetts Institute of Technology, said Monday that many of his gay friends “claim to have pretty good gaydar, so it’s obviously possible for AI to do the same.”

While he saw nothing scientifically wrong with the new analysis, he said, “the question of whether certain applications of AI are ethically wrong is crucial and should be debated.” For example, he asked, “What if a repressive regime uses such techniques to identify and discriminate against gays? What if lethal autonomous weapons use similar techniques to kill only people of a certain ethnicity or sexual orientation? For those who think we don’t need to discuss the societal implications of AI, this paper should be a wake-up call.”